Context

Functional testing is a best practice in application development: it gives end users a way to exercise an application’s functionality and suggest desired features.

Application developers often need to test a particular user flow, mimic interactions, and validate the expected outcomes of an application’s user interface (UI). Functional tests let them do all of that.

Within an organization, functional tests offer two key benefits:

- Developers have more confidence that their code aligns well with the product specifications.

- Quality assurance (QA) engineers can use black-box testing techniques to properly validate an application without needing to understand the internal application logic.

Functional testing is different from unit testing, although both are essential. Unit testing checks how an application works by testing the individual modules within the codebase. Functional testing, on the other hand, only cares about what an application does, without considering the underlying implementation.

Hitting the Ground Running with Selenium

In the early years of the C3 AI Applications Engineering team, we developed an in-house functional testing framework to support our internal and external (customer) testing needs.

Specifically, we needed a framework that:

- Was integrated with the C3 AI Type System to ensure APIs could be understood and properly mocked.

- Would be automatically handled in our continuous integration pipelines to enable seamless regression testing.

Our first framework for writing application functional tests was a Selenium-based UI testing framework called Skywalker. To run these tests, users had to run a Selenium server. Skywalker translated each call a test made to it into API calls that Selenium could understand, and Selenium then simulated the user interactions within a browser.

This was beneficial for the use cases listed above, since developers who were already familiar with the C3 AI Type System APIs could use the existing documentation to write their tests with the same patterns. They did not have to switch contexts or make sure that user interactions were expressed in a way Selenium could understand, as all that logic was abstracted away from them.

Challenges Arise

For a while, Skywalker served our use cases well, but over time, we started to have some issues:

- As with many Selenium-based testing frameworks, timing was a recurring issue with Skywalker and caused instability in our tests. Because Selenium is synchronous, we relied on waiting periods and timeouts within our tests. This became a critical pain point because the time allotted was often too short, causing tests to fail intermittently.

- Running Selenium alongside our server was quite expensive, and requiring our external developers to do the same just to test their applications was not ideal.

It’s important to note that there is a reason Selenium is so widely used in the industry and was our first choice for user interface testing as well: its use of the WebDriver API means that it is well-documented, has a broad community of users, and is actively developed. However, given the issues we were facing, we wanted to see if we could find an alternative.

Let’s Keep It Simple: The Browser

Before considering an alternative, we first needed to know what benefit Selenium was providing so we could duplicate the underlying function another way without the long wait times.

At its core, Selenium (and the WebDriver layer underlying it) provides an abstraction that allows a process to simulate browser interactions or, in other words, drive a web browser. WebDriver translates Selenium’s API calls into native browser function calls. Through our investigations, we realized that all we really needed was a way to call native browser APIs to simulate user interactions in an application. If we could do this while maintaining the same type-system-aware test-writing paradigms that our users were already familiar with, they wouldn’t even notice the difference.
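As a rough illustration of what calling native browser APIs to simulate a user interaction can look like, the sketch below dispatches a synthetic event through the standard `EventTarget.dispatchEvent` API. It is not Luke’s implementation: the `simulate` helper is hypothetical, and a bare `EventTarget` stands in for a button. In a real page the target would be a DOM element, for example one returned by `document.querySelector`.

```javascript
// Stand-in for a DOM element (assumption: a real test would look the
// element up in the page instead of constructing an EventTarget).
const button = new EventTarget();

// The application code under test: counts how often the button is clicked.
let clickCount = 0;
button.addEventListener("click", () => {
  clickCount += 1;
});

// Hypothetical helper: simulate a user interaction by dispatching a
// synthetic event of the given type on the target.
function simulate(target, type) {
  const event = new Event(type, { bubbles: true, cancelable: true });
  // dispatchEvent runs the listeners synchronously and returns false
  // only if a listener called preventDefault() on a cancelable event.
  return target.dispatchEvent(event);
}

simulate(button, "click");
console.log(clickCount);
```

No separate driver process is involved here: the event is created and delivered inside the same JavaScript environment that runs the application.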

The Changing of the Guard: From Skywalker to Luke

Our team reworked Skywalker to remove the dependency on Selenium. The resulting framework, Luke, was entirely new and came with a host of new capabilities:

- Our new framework was asynchronous by nature. We no longer had to create waiting periods and hope we configured them to be long enough. Using a Promise-based approach, Luke was built to retry operations to make tests more resilient to environment-dependent differences in performance. For instance, if a test looked to click on a specific button after a web page was initially loaded, Luke would retry the operation until page load was completed without requiring the test writer to manually specify a waiting period for the page load.

- We used Web Workers to allow communication with the server-side type system from within a browser. This would ensure that tests could still use the same familiar C3 AI Type System APIs as before, but they now ran alongside the main thread of the browser.

- We built a complementary playground environment for users to write or upload their tests in the browser. Through our playground, users can execute their tests, which will be run in a separate browser window controlled by the playground.
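The Promise-based retry idea from the first point can be sketched as follows. This is a minimal illustration under stated assumptions, not Luke’s actual code: `retryUntil`, its `timeoutMs` and `intervalMs` options, and the `page` and `clickButton` stand-ins are all hypothetical names.

```javascript
// Hypothetical helper: keep retrying `operation` until it succeeds or
// `timeoutMs` elapses, pausing `intervalMs` between attempts.
async function retryUntil(operation, { timeoutMs = 30000, intervalMs = 50 } = {}) {
  const deadline = Date.now() + timeoutMs;
  let lastError;
  do {
    try {
      // Success: resolve with whatever the operation returned.
      return await operation();
    } catch (error) {
      // Failure: remember the error and try again after a short pause.
      lastError = error;
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  } while (Date.now() < deadline);
  throw new Error(`Timed out after ${timeoutMs} ms: ${lastError.message}`);
}

// Stand-in for a page that finishes loading after 200 ms.
const page = { loaded: false };
setTimeout(() => {
  page.loaded = true;
}, 200);

// Stand-in for "click a button": fails until the page has loaded.
function clickButton() {
  if (!page.loaded) {
    throw new Error("button not found yet");
  }
  return "clicked";
}

// The caller states what should happen, not how long to wait for it.
retryUntil(clickButton, { timeoutMs: 2000 }).then((result) => console.log(result));
```

The test writer never picks a sleep duration. The operation succeeds as soon as the page is ready, and the timeout only bounds how long a genuinely failing step is allowed to keep retrying.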

Luke in Action

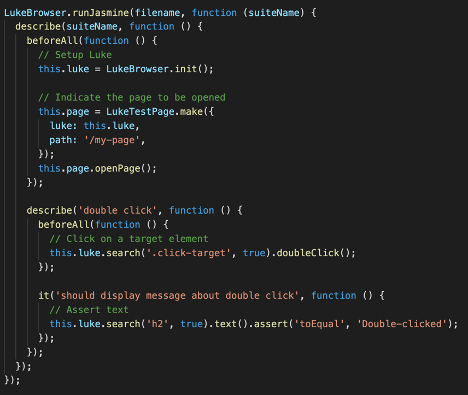

The image above shows a simple example of a Luke test, structured using the familiar Jasmine testing framework. In the beforeAll block, the Luke framework is initialized with a single init command, and the page we are testing is opened by calling openPage. Subsequently, we tell Luke to search for particular target elements and conduct interactions (e.g., double-clicking) or generate assertions (e.g., on the text content).

Notice that there are no user-specified waiting periods; Luke will automatically retry each of the search operations for a default timeout period of 30 seconds and will fail the step if the operation does not succeed within the allotted time. The user can provide a different timeout value as an optional third parameter to the search method should they wish.

Test Writing: As No-Code as Possible

Our playground interface was developed to make test writing as simple as possible. Since Luke simplified our framework to rely on native browser APIs, we realized that we should also be able to record user interactions from a web page that uses those same APIs.

We developed a recording feature for no-code test authoring that tracks user interactions in a target window and generates a Luke test based on them.

We also developed a browser extension alongside our playground that facilitated communication between the target window and our playground to track recorded interactions. Users can record all native browser interactions (e.g., clicks, inputs, drag-and-drops) and generate assertions to validate expected behaviors.
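To make the record-then-generate idea concrete, here is a small sketch that turns a list of recorded interactions into readable test steps. Everything in it is an assumption made for illustration: the shape of a recorded interaction (`type`, `selector`, `value`), the `describeStep` and `generateSteps` helpers, and the output format. The real recorder emits Luke tests, whose syntax is not reproduced here.

```javascript
// Hypothetical: describe one recorded interaction as a readable step.
function describeStep(interaction) {
  switch (interaction.type) {
    case "click":
      return `click "${interaction.selector}"`;
    case "input":
      return `type "${interaction.value}" into "${interaction.selector}"`;
    case "assertText":
      return `expect "${interaction.selector}" to have text "${interaction.value}"`;
    default:
      throw new Error(`Unsupported interaction: ${interaction.type}`);
  }
}

// Hypothetical: number the steps in the order they were recorded.
function generateSteps(recording) {
  return recording.map((interaction, index) => `${index + 1}. ${describeStep(interaction)}`);
}

// A made-up recording: fill in a field, save, then check the result.
const recording = [
  { type: "input", selector: "#name", value: "Ada" },
  { type: "click", selector: "#save" },
  { type: "assertText", selector: "#status", value: "Saved" },
];

const steps = generateSteps(recording);
console.log(steps.join("\n"));
```

Keeping the recording as plain data separates capturing interactions from generating test code, so the same recording could in principle be rendered in more than one output format.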

Users can also define user stories for their tests to ensure the tests are well-structured and sufficiently cover a product’s expected user flows, so that failures can be clearly traced to user impact.

Conclusion

We hope that our journey through testing has been insightful. We are actively working to make the testing experience better and simpler, so that our users can have the confidence they deserve in their applications. Stay tuned for more updates, and happy testing.

About the Author

Omid Afshar is a software engineer on the Applications Engineering team at C3 AI. He holds a bachelor’s degree in computer science from the University of California, Berkeley, and a master’s in computer science from the University of Illinois at Urbana-Champaign. Omid enjoys hiking, traveling the globe, and watching NFL football (go Niners!).